Building a High-Performance Backend for Real-time Patient Data Streaming

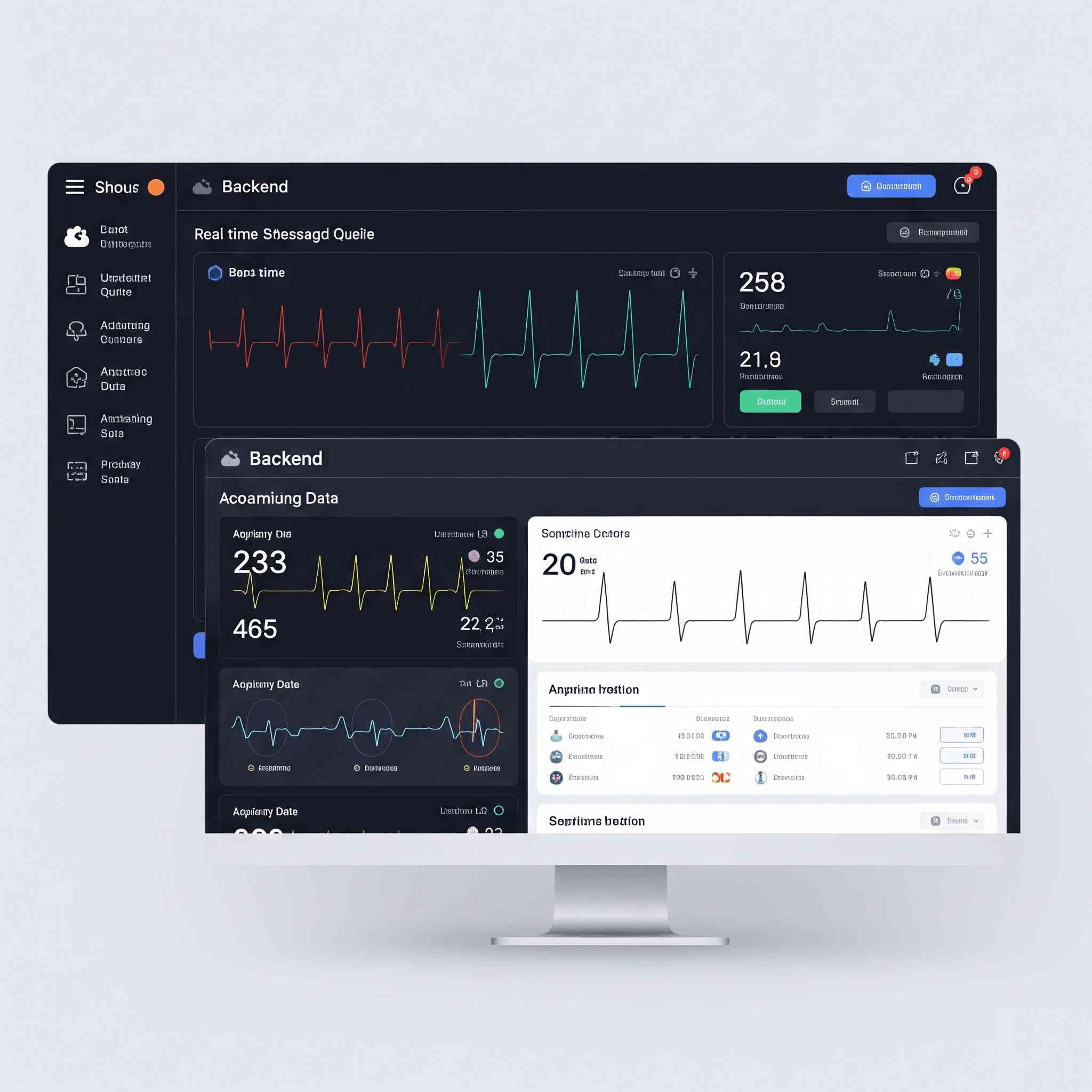

LifeSignals Inc.: Building a High-Performance Backend for Real-time Patient Data Streaming

This case study highlights Triophore’s expertise in developing robust, scalable backend solutions for critical healthcare data. The focus is on providing LifeSignals Inc. with the infrastructure required for real-time streaming of ECG and other vital patient data, a cornerstone for advanced patient monitoring systems.

The Challenge: Low-Latency, High-Scalability Real-time Data Streaming

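LifeSignals Inc. required a sophisticated backend service capable of handling a continuous flow of highly sensitive, time-critical patient data. The problem statement centers on real-time ECG and vitals data and sets out two paramount requirements, low latency and high scalability, together with the need to handle a large volume of traffic and data: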

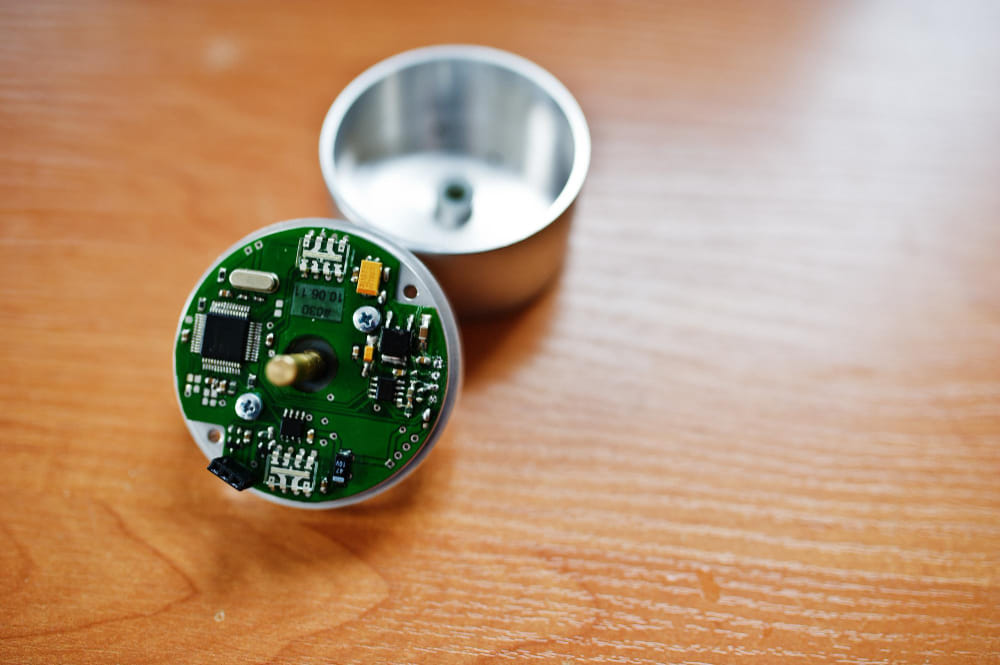

Real-time ECG and Other Patient Vitals: This refers to data streams from devices such as ECG patches, which record the heart's electrical activity, and potentially other sensors measuring parameters like heart rate, respiration rate, oxygen saturation, and temperature. The “real-time” aspect means data must be processed and made available for analysis or display with minimal delay.

Low Latency: For medical monitoring, low latency is crucial. Any significant delay in data transmission could delay alerts for critical events, hinder immediate clinical intervention, or compromise the accuracy of real-time diagnostics. The system therefore had to be optimized for speed at every stage, from data ingestion to delivery.

High Scalability: The service needed to accommodate a large and potentially fast-growing number of connected patients and devices. The backend therefore had to handle a very high volume of concurrent data streams without degrading performance, allowing LifeSignals to expand its reach and serve more users seamlessly.

Handling a Large Volume of Traffic and Data: Real-time vital-sign monitoring generates a continuous stream of data points. The backend service needed to process, route, and store this constant influx of information efficiently without becoming a bottleneck.

The Solution: A Custom-Engineered Streaming Powerhouse

Triophore responded to these stringent requirements by designing, developing, testing, and delivering a bespoke backend streaming server. The solution was specifically engineered to meet the high demands of medical data:

Scalable Architecture: The server was built with scalability as a core architectural principle. This likely involved a distributed design that can be scaled horizontally by adding more servers or instances as data volume and the number of concurrent connections grow, so the service can keep pace with LifeSignals’ needs without a complete re-architecture.
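
The case study does not say how streams are spread across instances. As a hedged illustration of one common technique, the sketch below uses rendezvous (highest-random-weight) hashing to assign each patient or device ID to a server instance, so every ingestion node agrees on the owner and removing an instance only moves the streams it owned. The instance names, the `pickInstance` helper, and the use of SHA-256 are assumptions for illustration, not details of the delivered system.

```typescript
import { createHash } from "node:crypto";

// Hypothetical helper: a deterministic 32-bit weight for a (node, key) pair.
function score(node: string, key: string): number {
  const digest = createHash("sha256").update(`${node}|${key}`).digest();
  return digest.readUInt32BE(0); // first 4 bytes of the digest as an unsigned int
}

// Rendezvous hashing: the owner of a key is the node with the highest weight.
// Any caller with the same node list computes the same owner, with no lookup table.
function pickInstance(nodes: readonly string[], key: string): string {
  if (nodes.length === 0) throw new Error("no instances available");
  let best = nodes[0];
  let bestScore = score(best, key);
  for (const node of nodes.slice(1)) {
    const s = score(node, key);
    // Break ties on the node name so the result never depends on list order.
    if (s > bestScore || (s === bestScore && node > best)) {
      best = node;
      bestScore = s;
    }
  }
  return best;
}
```

When an instance is added, only the keys for which the new instance scores highest move to it; every other stream stays where it was.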

Lightweight Design: A “lightweight” server implies optimized code, efficient resource utilization, and minimal overhead. This design choice directly contributes to lower latency and better performance, especially when handling a high volume of continuous data streams, and increases the number of concurrent connections each server can sustain.

Low Latency Optimization: Achieving low latency involved meticulous engineering. This could include using efficient communication protocols, optimizing data serialization and deserialization, minimizing processing steps, and potentially employing techniques like direct memory access or highly optimized I/O operations to reduce delays.
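
To make “optimizing data serialization” concrete, here is a hedged sketch of packing a block of ECG samples into a compact binary frame instead of JSON. The frame layout (a 4-byte sequence number, an 8-byte timestamp in milliseconds, a 2-byte sample count, then 16-bit signed samples, all big-endian) and the `EcgBlock` type are hypothetical; the case study does not describe LifeSignals’ actual wire format.

```typescript
// Hypothetical frame layout (big-endian):
//   uint32 seq | float64 timestampMs | uint16 count | int16 samples[count]
const HEADER_BYTES = 4 + 8 + 2;

interface EcgBlock {
  seq: number;         // monotonically increasing block counter
  timestampMs: number; // capture time of the first sample
  samples: number[];   // raw ADC values, assumed to fit in int16
}

function encodeEcgBlock(block: EcgBlock): Buffer {
  const buf = Buffer.alloc(HEADER_BYTES + block.samples.length * 2);
  buf.writeUInt32BE(block.seq, 0);
  buf.writeDoubleBE(block.timestampMs, 4);
  buf.writeUInt16BE(block.samples.length, 12);
  block.samples.forEach((v, i) => buf.writeInt16BE(v, HEADER_BYTES + i * 2));
  return buf;
}

function decodeEcgBlock(buf: Buffer): EcgBlock {
  const count = buf.readUInt16BE(12);
  const samples: number[] = [];
  for (let i = 0; i < count; i++) {
    samples.push(buf.readInt16BE(HEADER_BYTES + i * 2));
  }
  return { seq: buf.readUInt32BE(0), timestampMs: buf.readDoubleBE(4), samples };
}
```

Each sample costs a fixed two bytes and needs no text parsing on the receiving side. `writeInt16BE` throws a `RangeError` for values outside the int16 range, so out-of-range samples fail loudly rather than being silently truncated.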

Robust Data Handling: The server was “specially designed to handle a large volume of traffic and data,” which implies mechanisms for reliably queuing, processing, and routing continuous data streams, including safeguards for data integrity and against data loss under peak loads.
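
The case study does not describe its queuing mechanism. One common way to avoid silently losing data under peak load is a bounded queue that refuses new items when full, signalling the producer to slow down (backpressure) instead of dropping samples. The `BoundedQueue` class below is a hypothetical, minimal ring-buffer sketch of that pattern.

```typescript
// Hypothetical bounded FIFO backed by a fixed-size ring buffer.
// offer() returns false when full so the producer can pause instead of dropping data.
class BoundedQueue<T> {
  private readonly slots: (T | undefined)[];
  private head = 0;  // index of the oldest item
  private count = 0; // number of items currently stored

  constructor(private readonly capacity: number) {
    if (!Number.isInteger(capacity) || capacity <= 0) {
      throw new RangeError("capacity must be a positive integer");
    }
    this.slots = new Array<T | undefined>(capacity);
  }

  get size(): number {
    return this.count;
  }

  offer(item: T): boolean {
    if (this.count === this.capacity) return false; // full: apply backpressure
    this.slots[(this.head + this.count) % this.capacity] = item;
    this.count++;
    return true;
  }

  poll(): T | undefined {
    if (this.count === 0) return undefined;
    const item = this.slots[this.head];
    this.slots[this.head] = undefined; // release the reference for garbage collection
    this.head = (this.head + 1) % this.capacity;
    this.count--;
    return item;
  }
}
```

Returning `false` mirrors the convention of Node.js writable streams, whose `write()` returns `false` once the internal buffer reaches its high-water mark; the producer is then expected to pause until the buffer drains.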

End-to-End Involvement and Ongoing Support: Triophore’s full lifecycle involvement from design to deployment, coupled with ongoing maintenance and support, ensures the backend service remains stable, secure, and performs optimally in the long term, adapting to evolving requirements and technologies.

The Tech Stack: Fueling Real-time Performance and Flexibility

The choice of technologies for this streaming backend underscores a focus on high performance, real-time communication, and flexible deployment:

WebSockets: This protocol, standardized in RFC 6455, provides a full-duplex channel over a single TCP connection, enabling persistent, bi-directional communication between the client (e.g., a mobile app) and the server. Unlike traditional request-response HTTP, the connection stays open, so the server can push updates to clients as soon as they are available instead of waiting to be polled. This makes WebSockets well suited to real-time data streaming.
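
To show what the persistent connection looks like on the wire, the sketch below covers two pieces of RFC 6455 using only Node.js built-ins: the `Sec-WebSocket-Accept` value the server returns during the HTTP upgrade handshake, and the encoding of an unmasked server-to-client frame. The function names are hypothetical, and the case study does not say whether LifeSignals’ server hand-rolls framing or uses a library.

```typescript
import { createHash } from "node:crypto";

// Fixed GUID defined by RFC 6455 for the opening handshake.
const WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11";

// Value for the Sec-WebSocket-Accept header of the server's 101 response:
// base64(SHA-1(client's Sec-WebSocket-Key + GUID)).
function acceptKey(secWebSocketKey: string): string {
  return createHash("sha1").update(secWebSocketKey + WS_GUID).digest("base64");
}

// Encode one unfragmented (FIN=1), unmasked server-to-client frame.
// opcode 0x1 = text, 0x2 = binary.
function encodeFrame(payload: Buffer, opcode: 0x1 | 0x2 = 0x2): Buffer {
  const len = payload.length;
  let header: Buffer;
  if (len < 126) {
    header = Buffer.from([0x80 | opcode, len]); // 7-bit length fits in byte 1
  } else if (len < 65536) {
    header = Buffer.alloc(4);
    header[0] = 0x80 | opcode;
    header[1] = 126; // a 16-bit extended length follows
    header.writeUInt16BE(len, 2);
  } else {
    header = Buffer.alloc(10);
    header[0] = 0x80 | opcode;
    header[1] = 127; // a 64-bit extended length follows
    header.writeUInt32BE(Math.floor(len / 0x100000000), 2); // high 32 bits
    header.writeUInt32BE(len >>> 0, 6);                     // low 32 bits
  }
  return Buffer.concat([header, payload]);
}
```

Frames sent by clients are masked and must be unmasked by the server, which this sketch does not cover; a production service would normally rely on a mature WebSocket library for framing, fragmentation, and control frames.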

Node.js: A JavaScript runtime built on Chrome’s V8 JavaScript engine, Node.js is known for its non-blocking, event-driven architecture. This makes it well suited to high-concurrency, I/O-bound applications such as streaming servers, where a single process can serve a large number of simultaneous connections efficiently, provided the work done per message is small enough not to block the event loop.
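
As a hedged illustration of the event-driven model, the sketch below fans incoming vital-sign samples out to per-patient subscribers with Node’s built-in `EventEmitter`, so no thread is dedicated to any connection. The `VitalsHub` class, the `patient:<id>` event naming, and the `VitalSample` shape are assumptions for illustration, not LifeSignals’ design.

```typescript
import { EventEmitter } from "node:events";

// Hypothetical sample shape for illustration.
interface VitalSample {
  patientId: string;
  kind: "ecg" | "hr" | "spo2" | "resp" | "temp";
  value: number;
  timestampMs: number;
}

type Listener = (sample: VitalSample) => void;

// Hypothetical hub: device ingestion publishes samples, viewers subscribe per patient.
class VitalsHub {
  private readonly emitter = new EventEmitter();

  constructor() {
    // Many viewers may watch one patient; 0 removes the default limit of 10 listeners.
    this.emitter.setMaxListeners(0);
  }

  // Returns an unsubscribe function, e.g. to call when a WebSocket closes.
  subscribe(patientId: string, listener: Listener): () => void {
    const event = `patient:${patientId}`;
    this.emitter.on(event, listener);
    return () => {
      this.emitter.off(event, listener);
    };
  }

  // Synchronously invokes every listener for this patient;
  // returns true if at least one listener was registered.
  publish(sample: VitalSample): boolean {
    return this.emitter.emit(`patient:${sample.patientId}`, sample);
  }
}
```

Because listeners run synchronously on the single event-loop thread, each one should only hand the sample off (for example, queue it for a socket write); heavy processing inside a listener would delay every other connection.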

MQTT: A lightweight, publish-subscribe messaging protocol, MQTT is designed for constrained devices and low-bandwidth, high-latency, or unreliable networks. It’s highly effective for collecting data from remote IoT devices, such as the ECG patches or other vital sign monitors. MQTT might be used for the initial ingestion of data from the devices into the backend, which is then processed and streamed out via WebSockets to end-user